In this post, I’ll walk you through a serverless application that uses Amazon Bedrock, a fully managed foundation model service, to build a Q&A-style interface. We will explore the following components:

- AWS Lambda for backend serverless compute and logic.

- Amazon API Gateway for exposing a secure API endpoint to the web.

- React.js for a simple front end that sends user questions to the Bedrock-backed API.

- Amazon Titan (through Bedrock) as the underlying foundation model to generate text responses.

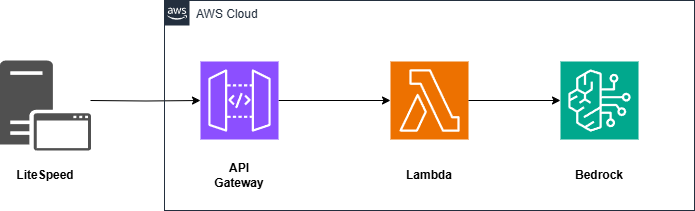

Project Overview

This project answers user questions by sending requests to the Amazon Titan model hosted in Amazon Bedrock. The workflow is:

- The user types a question in the frontend.

- Frontend makes a POST request to an API Gateway endpoint.

- API Gateway invokes AWS Lambda, passing the user’s query.

- Lambda calls the Amazon Bedrock endpoint for text generation using the Titan foundation model.

- Amazon Titan processes the query and returns a generated response.

- Lambda returns the model output to the frontend.

- The frontend displays the Titan-generated answer to the user.

Here’s a high-level architecture diagram:

The AWS Lambda Function

Below is the AWS Lambda code that queries Amazon Bedrock. It uses the AWS SDK for JavaScript (@aws-sdk/client-bedrock-runtime) to invoke the Titan model:

What’s happening in this code?

- Parse Input: It extracts user_query from the request.

- Prepare Model Payload: Builds the request body, passing the text as inputText along with the chosen temperature and topP settings for text generation.

- Invoke the Model: Sends the request through BedrockRuntimeClient and awaits the response.

- Return the Result: Wraps the Titan output in a JSON object for the frontend.
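The original function isn't reproduced here, so the following is a minimal, hypothetical sketch of the four steps above rather than the author's code. It assumes the amazon.titan-text-express-v1 model ID, assumed generation settings (maxTokenCount 512, temperature 0.5, topP 0.9), and an injected invokeModel function standing in for the SDK call, which keeps the logic testable without AWS credentials. Titan text models take inputText plus a textGenerationConfig object and return a results array whose entries carry outputText.

```javascript
// Hypothetical sketch of the Lambda logic; not the original post's code.
// Assumed: the model ID, the generation settings, and the injected
// invokeModel(params) function, which stands in for
// BedrockRuntimeClient.send(new InvokeModelCommand(params)).

const MODEL_ID = "amazon.titan-text-express-v1"; // assumed Titan text model ID

// Parse Input: read user_query from the API Gateway proxy event body.
function parseUserQuery(event) {
  let raw = event.body ?? "{}";
  if (event.isBase64Encoded) {
    raw = Buffer.from(raw, "base64").toString("utf8");
  }
  const { user_query } = JSON.parse(raw); // throws SyntaxError on invalid JSON
  if (typeof user_query !== "string" || user_query.trim() === "") {
    throw new Error("user_query must be a non-empty string");
  }
  return user_query;
}

// Prepare Model Payload: Titan text models expect inputText plus textGenerationConfig.
function buildTitanRequest(userQuery) {
  return {
    modelId: MODEL_ID,
    contentType: "application/json",
    accept: "application/json",
    body: JSON.stringify({
      inputText: userQuery,
      textGenerationConfig: {
        maxTokenCount: 512, // assumed value
        temperature: 0.5, // assumed value
        topP: 0.9, // assumed value
      },
    }),
  };
}

// Wraps a payload in the proxy response shape API Gateway expects from Lambda.
function jsonResponse(statusCode, payload) {
  return {
    statusCode,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  };
}

// Builds a handler around invokeModel(params) => Promise<{ body: Uint8Array }>.
function makeHandler(invokeModel) {
  return async function handler(event) {
    let userQuery;
    try {
      userQuery = parseUserQuery(event);
    } catch (err) {
      return jsonResponse(400, { error: err.message });
    }

    let outputText;
    try {
      // Invoke the Model: the response body is raw bytes containing JSON.
      const response = await invokeModel(buildTitanRequest(userQuery));
      const titan = JSON.parse(new TextDecoder().decode(response.body));
      outputText = titan.results[0].outputText;
    } catch (err) {
      console.error("Bedrock invocation failed:", err);
      return jsonResponse(502, { error: "Model invocation failed" });
    }

    // Return the Result: hand the first Titan result back to the frontend.
    return jsonResponse(200, { outputText });
  };
}

// Wiring it to the real client in an ES module Lambda (Node.js 18+):
//   import { BedrockRuntimeClient, InvokeModelCommand } from "@aws-sdk/client-bedrock-runtime";
//   const client = new BedrockRuntimeClient({ region: process.env.AWS_REGION });
//   export const handler = makeHandler((params) => client.send(new InvokeModelCommand(params)));
```

Injecting invokeModel is a design choice for testability: the same handler runs against a stub locally and against BedrockRuntimeClient once deployed, as the closing comment shows.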

Amazon API Gateway

To create the API, you can configure a simple HTTP endpoint for your Lambda function directly in the AWS console.

Sign in to the AWS Management Console and open the API Gateway console.

Create a new API:

- Click Create API.

- Select HTTP API or REST API (for a simple proxy to Lambda, an HTTP API is usually enough, but choose REST API if you plan to use API keys, which HTTP APIs don't support).

- For HTTP API, choose Build.

Configure the API:

- Integration: In the Integrations section, choose Add Integration and select Lambda.

- From the dropdown, select your Lambda function (e.g., bedrock-trigger).

- For the Integration type, leave it as Lambda.

Set a Route:

- Under the Routes section, define the route that should invoke your Lambda function, for example Method: POST and Path: /bedrock-trigger.

- Attach the integration (the Lambda function) to this route.

Configure Authorization (Optional):

- If you want to require authorization, for example a JWT authorizer backed by Amazon Cognito, you can set that up in the Authorization section.

- If you’re just testing, you can leave the route open and add authorization later.

Deploy the API:

- Click Next to review and Create or Deploy the API.

- Once the API is deployed, note the Invoke URL in the console. It will look something like:

https://<random-string>.execute-api.<region>.amazonaws.com

Test the Endpoint:

- In the Routes section, test the POST /bedrock-trigger route by passing a sample JSON body, e.g.,

```json
{
  "user_query": "What is an API?"
}
```

- You should receive a response from your Lambda.
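If you'd rather exercise the route from a script than from the console, here is a minimal sketch assuming Node.js 18 or later (for the global fetch). The function name testBedrockRoute and its options object are hypothetical; fetchImpl exists only so the request can be stubbed. For a REST API, the Invoke URL also contains the stage name, so pass the full stage URL.

```javascript
// Hypothetical test script for the deployed route (Node.js 18+ for global fetch).
// invokeUrl is your own Invoke URL; apiKey is only needed once a key is required.
async function testBedrockRoute(invokeUrl, userQuery, { apiKey, fetchImpl = fetch } = {}) {
  const url = invokeUrl.replace(/\/+$/, "") + "/bedrock-trigger";
  const headers = { "Content-Type": "application/json" };
  if (apiKey) {
    headers["x-api-key"] = apiKey;
  }

  const res = await fetchImpl(url, {
    method: "POST",
    headers,
    body: JSON.stringify({ user_query: userQuery }),
  });

  // Read as text first so error bodies that aren't JSON still appear in the message.
  const text = await res.text();
  if (!res.ok) {
    throw new Error(`HTTP ${res.status}: ${text}`);
  }
  return JSON.parse(text);
}

// Example:
// testBedrockRoute("https://<random-string>.execute-api.<region>.amazonaws.com", "What is an API?")
//   .then((body) => console.log(body))
//   .catch((err) => console.error(err.message));
```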

(Optional) Enable API Keys

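If you want to protect your API with an API key, note that API keys and usage plans are a REST API feature; HTTP APIs don't support them.

- In the API Gateway console, open API Keys and create a new API key.

- Create a usage plan (optionally with throttling and quota limits), add your API's deployed stage to it, and associate the API key with the plan. A key is only accepted on stages covered by a usage plan it belongs to.

- On the POST method, set API key required to true, then redeploy the API so the change takes effect.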

- In your frontend code, pass this API key as a header:

```javascript
headers: {
  "x-api-key": "<Your-API-Key-Here>",
  "Content-Type": "application/json"
}
```

The Frontend

Below is the JavaScript code that sends the query to the API Gateway endpoint, receives the output from Bedrock via Lambda, and displays it to the user.

How does this work?

- User Query: The question is stored in local state via useState.

- Request Handling: We limit free usage to 3 requests in a single session. A POST request is sent to the API Gateway endpoint.

- Security: An API key is sent in the x-api-key header. Storing it in front-end code isn’t best practice, because anyone can read it from the browser; consider a more secure approach.

- Displaying the Answer: If the request succeeds, we parse the JSON body and display outputText from the Titan model’s response.
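The component itself isn't shown, so here is a hypothetical sketch of the request logic described above, kept independent of React so it runs on its own. It assumes the Lambda responds with { outputText }, that a "session" means a single page load (the counter lives in memory and resets on refresh), and that the API is a REST API with an API key; createBedrockAsker, API_URL, and API_KEY are illustrative names.

```javascript
// Hypothetical sketch of the frontend request logic, kept free of React so it runs anywhere.
// Assumed: endpoint and apiKey come from your own config, a "session" means one page load,
// and the Lambda responds with { outputText }.
function createBedrockAsker({ endpoint, apiKey, maxRequests = 3, fetchImpl = fetch }) {
  let used = 0; // requests attempted so far in this session

  return async function ask(userQuery) {
    if (used >= maxRequests) {
      throw new Error(`Free limit of ${maxRequests} requests reached for this session`);
    }
    used += 1; // counted up front, so failed requests also use up the allowance

    const res = await fetchImpl(endpoint, {
      method: "POST",
      headers: { "x-api-key": apiKey, "Content-Type": "application/json" },
      body: JSON.stringify({ user_query: userQuery }),
    });

    // REST APIs answer 403 for a missing or invalid key and 429 when a usage plan
    // throttles the caller or its quota is exhausted.
    if (res.status === 403) throw new Error("Forbidden: check the x-api-key header");
    if (res.status === 429) throw new Error("Too many requests: usage plan limit reached");
    if (!res.ok) throw new Error(`Request failed with HTTP ${res.status}`);

    const data = await res.json();
    return data.outputText;
  };
}

// Inside a React component (sketch):
//   const ask = useMemo(() => createBedrockAsker({ endpoint: API_URL, apiKey: API_KEY }), []);
//   const [query, setQuery] = useState("");
//   const [answer, setAnswer] = useState("");
//   const onSubmit = async () => setAnswer(await ask(query));
```

Because this limit lives in browser memory, it only discourages casual overuse; anyone can bypass it, so server-side controls such as usage-plan throttling and quotas are what actually cap spending.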

Lessons Learned & Best Practices

- Cost: Experimenting with models can get expensive. Amazon Bedrock’s on-demand pricing for text models is based on the number of input and output tokens processed, so a public-facing app or extensive testing can add up quickly; take precautions such as request limits.

- Serverless: Using AWS Lambda and API Gateway keeps costs low, as you only pay for what you use.

- Security: Storing an API key in the frontend is not ideal for production. Consider a more robust auth layer (e.g., Amazon Cognito or other OIDC providers).

- Monitoring: Leverage Amazon CloudWatch logs and metrics to keep track of usage, latency, and potential errors.

- Bedrock: Amazon Titan is a capable text generation model, but be mindful of the CONTENT_FILTERED value in the completionReason field of its responses, which indicates that Titan’s built-in content moderation might have removed or filtered out some generated text.
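The cost, monitoring, and content-filtering points can be combined when handling each Titan response. A minimal sketch, assuming the Titan text response shape ({ inputTextTokenCount, results: [{ tokenCount, outputText, completionReason }] }): it flags CONTENT_FILTERED, estimates cost from token counts, and writes one JSON log line per request, which Lambda forwards to CloudWatch Logs. The function names are hypothetical, and prices are parameters to fill in from the current Amazon Bedrock pricing page, expressed per 1,000 tokens (convert if your pricing source uses a different unit).

```javascript
// Hypothetical helpers for inspecting a parsed Titan text response.
// Assumed response shape:
//   { inputTextTokenCount, results: [{ tokenCount, outputText, completionReason }] }
function inspectTitanResponse(titan) {
  const result = titan.results?.[0];
  if (!result) {
    throw new Error("Titan response contained no results");
  }
  return {
    outputText: result.outputText,
    completionReason: result.completionReason,
    filtered: result.completionReason === "CONTENT_FILTERED", // moderation removed text
    inputTokens: titan.inputTextTokenCount,
    outputTokens: result.tokenCount,
  };
}

// Rough cost estimate; prices are supplied by the caller, per 1,000 tokens.
// No real prices are hard-coded here.
function estimateCostUsd({ inputTokens, outputTokens }, { inputPer1k, outputPer1k }) {
  return (inputTokens / 1000) * inputPer1k + (outputTokens / 1000) * outputPer1k;
}

// One structured JSON line per request; Lambda sends console output to CloudWatch Logs.
function logUsage(info, latencyMs) {
  console.log(
    JSON.stringify({
      event: "titan_invocation",
      latencyMs,
      inputTokens: info.inputTokens,
      outputTokens: info.outputTokens,
      completionReason: info.completionReason,
    })
  );
}
```

When filtered is true, the frontend can show a short notice instead of presenting a partial answer as complete.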

Conclusion

By harnessing AWS Lambda for serverless compute, API Gateway to expose an endpoint, React for the frontend, and Amazon Bedrock with the Amazon Titan foundation model, you can rapidly prototype and deploy sophisticated Q&A, text generation, or other generative AI-driven applications.

This example illustrates just one use case; feel free to adapt it for chatbots, summarization, or other text-generation scenarios. With Bedrock’s managed approach, you avoid the hassle of provisioning and maintaining your own ML infrastructure and can focus on delivering value to your end users.

Links to code examples and a limited-time demo are below.